President Joe Biden on Monday signed a wide-ranging executive order on artificial intelligence, covering topics as varied as national security, consumer privacy, civil rights and commercial competition. The administration heralded the order as taking ‘vital steps forward in the U.S.’s approach on safe, secure, and trustworthy AI.’

The order directs departments and agencies across the U.S. federal government to develop policies aimed at placing guardrails alongside an industry that is developing newer and more powerful systems at a pace that has many concerned it will outstrip effective regulation.

‘To realize the promise of AI and avoid the risk, we need to govern this technology,’ Biden said during a signing ceremony at the White House. The order, he added, is ‘the most significant action any government anywhere in the world has ever taken on AI safety, security and trust.’

‘Red teaming’ for security

One of the marquee requirements of the new order is that companies developing advanced artificial intelligence systems conduct rigorous testing of their products to ensure that bad actors cannot use them for nefarious purposes. The process, known as red teaming, will assess, among other things, ‘AI systems threats to critical infrastructure, as well as chemical, biological, radiological, nuclear and cybersecurity risks.’

President Joe Biden delivers remarks about government regulations on artificial intelligence systems during an event in the East Room of the White House, Oct. 30, 2023.

The National Institute of Standards and Technology will set the standards for such testing, and AI companies will be required to report their results to the federal government prior to releasing new products to the public. The Departments of Homeland Security and Energy will be closely involved in the assessment of threats to vital infrastructure.

To counter the threat that AI will enable the creation and dissemination of false and misleading information, including computer-generated images and ‘deep fake’ videos, the Commerce Department will develop guidance for the creation of standards that will allow computer-generated content to be easily identified, a process commonly called ‘watermarking.’

The order directs the White House chief of staff and the National Security Council to develop a set of guidelines for the responsible and ethical use of AI systems by the U.S. national defense and intelligence agencies.

Privacy and civil rights

The order proposes a number of steps meant to increase Americans’ privacy protections when AI systems access information about them. That includes supporting the development of privacy-protecting technologies such as cryptography and creating rules for how government agencies handle data containing citizens’ personally identifiable information.

However, the order also notes that the United States needs legislation codifying the data privacy protections that Americans are entitled to. The U.S. currently lags far behind Europe in the development of such rules, and the order calls on Congress to ‘pass bipartisan data privacy legislation to protect all Americans, especially kids.’

The order recognizes that the algorithms that enable AI to process information and answer users’ questions can themselves be biased in ways that disadvantage members of minority groups and others often subject to discrimination. It therefore calls for the creation of rules and best practices addressing the use of AI in a variety of areas, including the criminal justice system, health care system and housing market.

The order covers several other areas, promising action on protecting Americans whose jobs may be affected by the adoption of AI technology; maintaining the United States’ market leadership in the creation of AI systems; and assuring that the federal government develops and follows rules for its own adoption of AI systems.

Open questions

Experts say that despite the broad sweep of the executive order, much remains unclear about how the Biden administration will approach the regulation of AI in practice.

Benjamin Boudreaux, a policy researcher at the RAND Corporation, told VOA that while it is clear the administration is ‘trying to really wrap their arms around the full suite of AI challenges and risks,’ much work remains to be done.

‘The devil is in the details here about what funding and resources go to executive branch agencies to actually enact many of these recommendations, and just what models a lot of the norms and recommendations suggested here will apply to,’ Boudreaux said.

International leadership

Looking internationally, the order says the administration will work to take the lead in developing ‘an effort to establish robust international frameworks for harnessing AI’s benefits and managing its risks and ensuring safety.’

James A. Lewis, senior vice president and director of the strategic technologies program at the Center for Strategic and International Studies, told VOA that the executive order does a good job of laying out where the U.S. stands on many important issues related to the global development of AI.

‘It hits all the right issues,’ Lewis said. ‘It’s not groundbreaking in a lot of places, but it puts down the marker for companies and other countries as to how the U.S. is going to approach AI.’

That’s important, Lewis said, because the U.S. is likely to play a leading role in the development of the international rules and norms that grow up around the technology.

‘Like it or not – and certainly some countries don’t like it – we are the leaders in AI,’ Lewis said. ‘There’s a benefit to being the place where the technology is made when it comes to making the rules, and the U.S. can take advantage of that.’

‘Fighting the last war’

Not all experts are certain the Biden administration’s focus is on the real threats that AI might present to consumers and citizens.

Louis Rosenberg, a 30-year veteran of AI development and the CEO of American tech firm Unanimous AI, told VOA he is concerned the administration may be ‘fighting the last war.’

‘I think it’s great that they’re making a bold statement that this is a very important issue,’ Rosenberg said. ‘It definitely shows that the administration is taking it seriously and that they want to protect the public from AI.’

However, he said, when it comes to consumer protection, the administration seems focused on how AI might be used to advance existing threats to consumers, like fake images and videos and convincing misinformation – things that already exist today.

‘When it comes to regulating technology, the government has a track record of underestimating what’s new about the technology,’ he said.

Rosenberg said he is more concerned about the new ways in which AI might be used to influence people. For example, he noted that AI systems are being built to interact with people conversationally.

‘Very soon, we’re not going to be typing in requests into Google. We’re going to be talking to an interactive AI bot,’ Rosenberg said. ‘AI systems are going to be really effective at persuading, manipulating, potentially even coercing people conversationally on behalf of whomever is directing that AI. This is the new and different threat that did not exist before AI.’


A Brief Look at the History of Telematics and Vehicles

Tips for Helping Your Students Learn More Efficiently

How To Diagnose Common Diesel Engine Problems Like a Pro

4 Common Myths About Wildland Firefighting Debunked

Is It Possible To Modernize Off-Grid Living?

4 Advantages of Owning Your Own Dump Truck

5 Characteristics of Truth and Consequences in NM

How To Make Your Wedding More Accessible

Ensure Large-Format Printing Success With These Tips

4 Reasons To Consider an Artificial Lawn

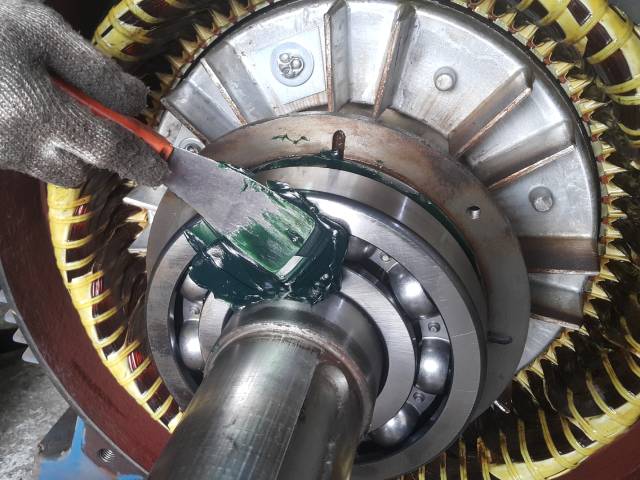

The Importance of Industrial Bearings in Manufacturing

5 Tips for Getting Your First Product Out the Door